# Motivation

As I write this, it’s winter break! I’m back home and, along with sleeping in and grinding LeetCode, I’ve been catching up on my video game backlog. One of my favorites right now is a virtual reality flight simulator called VTOL VR, named after vertical takeoff and landing (VTOL) aircraft.

An in-air T55 from VTOL VR.

The game has multiplayer lobbies where you can talk to other pilots and air traffic controllers over the radio. While the level of realism is nowhere near what networks such as VATSIM try to maintain, it’s not unheard of for radio communications to include some of the specialized jargon used in aviation. One hallmark of this lingo is the NATO phonetic alphabet, which assigns a code word to each of the 26 letters of the Latin alphabet (along with a standard pronunciation for each digit). It’s used to distinguish letters that could be confused over a low-quality channel such as radio or telephone. Imagine trying to tell B from D, or M from N, on a frequency filled with static.

NATO phonetic alphabet. (Source)

For example, if you’re talking to an air traffic controller, mistaking an instruction to hold short of runway 23 at *P* for hold short of runway 23 at *B* could put multiple aircraft in danger. Because of this, the NATO system requires that any letter being spelled out is replaced with its corresponding code word. Each character is pronounced individually, so an aircraft with the callsign N123AB would be read as NOVEMBER ONE TWO THREE ALPHA BRAVO.
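To make the expansion rule concrete, here is a minimal JavaScript sketch that spells out a callsign one character at a time. The `PHONETIC` table and the `toPhonetic` helper are hypothetical names for illustration, not code from the site. Digits use their plain English names to match the example above; formal ICAO radiotelephony uses variants such as TREE and NINER.

```javascript
// Hypothetical lookup table: letter or digit -> spoken code word.
const PHONETIC = {
  A: "ALPHA", B: "BRAVO", C: "CHARLIE", D: "DELTA", E: "ECHO",
  F: "FOXTROT", G: "GOLF", H: "HOTEL", I: "INDIA", J: "JULIET",
  K: "KILO", L: "LIMA", M: "MIKE", N: "NOVEMBER", O: "OSCAR",
  P: "PAPA", Q: "QUEBEC", R: "ROMEO", S: "SIERRA", T: "TANGO",
  U: "UNIFORM", V: "VICTOR", W: "WHISKEY", X: "X-RAY", Y: "YANKEE",
  Z: "ZULU",
  // Plain English digit names (object keys become the strings "0".."9").
  0: "ZERO", 1: "ONE", 2: "TWO", 3: "THREE", 4: "FOUR",
  5: "FIVE", 6: "SIX", 7: "SEVEN", 8: "EIGHT", 9: "NINE",
};

// Expand every character of a callsign into its code word.
// Throws if a character has no entry (e.g. "-" or a space).
function toPhonetic(callsign) {
  return [...callsign.toUpperCase()]
    .map((ch) => {
      const word = PHONETIC[ch];
      if (!word) throw new Error(`No phonetic word for "${ch}"`);
      return word;
    })
    .join(" ");
}

console.log(toPhonetic("N123AB"));
// → NOVEMBER ONE TWO THREE ALPHA BRAVO
```

Throwing on unknown characters, rather than silently skipping them, keeps a typo in the input from producing a plausible but wrong readback.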

The issue is… I don’t play aviation games with comms often enough to be proficient with the NATO alphabet. I’m lucky that VTOL VR shows the phonetic spelling of your plane’s callsign, because if it didn’t, I would have no clue how to properly identify my aircraft to people on frequency.

Just for fun, though,¹ I’d still like to be proficient at interpreting the letters.

# The Website

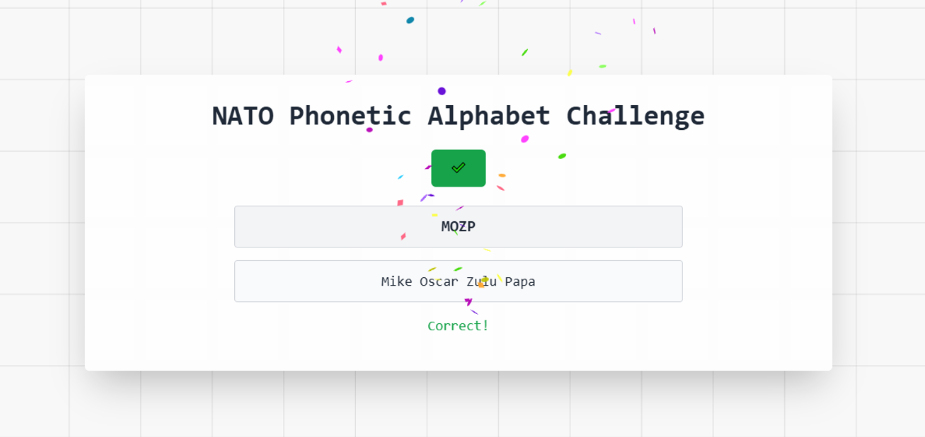

…so, I made a website. I used Astro as the framework, just because it’s what I’m most comfortable with when rapidly prototyping. The primary page contains nothing more than a few labels and a button, but that’s all I needed!

The site gives you a four-letter combination, say, JUFH. It then activates your microphone and listens for the correct phoneticization (in this case, it would mark you correct if you said JULIET UNIFORM FOXTROT HOTEL). If you blank on what a specific letter stands for, you can press a hint button to see what to say.² When you get it right, there’s a nice burst of confetti, and the site prompts you to try another one.
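A challenge like JUFH can be produced by picking random letters and looking up each one's code word. The sketch below uses a hypothetical `makeChallenge` helper with an injectable random-number function (defaulting to `Math.random`) so it can be tested deterministically; the site's real generator may work differently. It also returns the per-letter words, which is the kind of data a hint button would need.

```javascript
// Hypothetical table of code words, indexed 0 = A through 25 = Z.
const NATO = [
  "ALPHA", "BRAVO", "CHARLIE", "DELTA", "ECHO", "FOXTROT", "GOLF",
  "HOTEL", "INDIA", "JULIET", "KILO", "LIMA", "MIKE", "NOVEMBER",
  "OSCAR", "PAPA", "QUEBEC", "ROMEO", "SIERRA", "TANGO", "UNIFORM",
  "VICTOR", "WHISKEY", "X-RAY", "YANKEE", "ZULU",
];

// Build a random challenge. `random` must return a float in [0, 1),
// like Math.random; it is a parameter only to make testing easy.
function makeChallenge(length = 4, random = Math.random) {
  const letters = [];
  const words = [];
  for (let i = 0; i < length; i++) {
    const index = Math.floor(random() * NATO.length);
    letters.push(String.fromCharCode(65 + index)); // 65 is "A"
    words.push(NATO[index]);
  }
  return {
    prompt: letters.join(""), // what the user sees, e.g. "JUFH"
    hints: words,             // one code word per letter
    answer: words.join(" "),  // what the user should say
  };
}

// Usage with a fixed sequence that picks J, U, F, H (indices 9, 20, 5, 7).
const seq = [9, 20, 5, 7].map((i) => (i + 0.5) / 26);
const challenge = makeChallenge(4, () => seq.shift());
console.log(challenge.prompt); // → JUFH
console.log(challenge.answer); // → JULIET UNIFORM FOXTROT HOTEL
```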

The hardest part of this was the speech recognition, but even that wasn’t too bad! Most major browsers (except for Firefox…³) support the Web Speech API, which lets you both transcribe speech and synthesize speech from text. There’s no backend (at least, none that I had to deal with, since `SpeechRecognition` is a native browser API). All I have to do is check whether the user’s browser supports it and, if so, initialize a few basic parameters.

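```javascript
// Some browsers only expose the prefixed webkitSpeechRecognition constructor.
const SpeechRecognition =
  window.SpeechRecognition || window.webkitSpeechRecognition;

if (SpeechRecognition) {
  const speech = new SpeechRecognition();
  speech.lang = "en-US";         // recognize US English
  speech.interimResults = false; // only report final results
  speech.continuous = true;      // keep listening instead of stopping after one phrase
  // ...
}
```

When the recognizer finalizes a result, the browser fires a `result` event, which you handle by assigning a callback to `speech.onresult`, and… that’s it! All the validation does is compare that transcript with the expected answer (which I generate from a dictionary of the phoneticizations).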
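Because the recognizer returns free-form text, a strict string comparison can be brittle: the transcript may arrive in mixed case, with punctuation, or with "x-ray" written as "X ray". Below is a minimal sketch of a more forgiving check, built on hypothetical helpers `transcriptFrom`, `normalize`, and `isCorrect`; it is not the site's actual validation. `transcriptFrom` reads the standard `results` and `resultIndex` fields of a `SpeechRecognitionEvent`, and `isCorrect` normalizes both strings before comparing them word by word. The alias list, which also accepts the official ICAO spellings ALFA and JULIETT, is an assumption.

```javascript
// Join the top alternative of every result delivered in this event.
// With continuous = true, event.results accumulates over the session,
// so start at event.resultIndex to read only the newly finalized results.
function transcriptFrom(event) {
  const parts = [];
  for (let i = event.resultIndex; i < event.results.length; i++) {
    parts.push(event.results[i][0].transcript);
  }
  return parts.join(" ");
}

// Hypothetical spelling variants mapped to the words used in the answer.
const ALIASES = new Map([
  ["ALFA", "ALPHA"],
  ["JULIETT", "JULIET"],
  ["WHISKY", "WHISKEY"],
]);

// Uppercase, unify X-RAY spellings, strip punctuation, split into words,
// and map aliases onto their canonical spelling.
function normalize(text) {
  return text
    .toUpperCase()
    .replace(/\bX[\s-]*RAY\b/g, "XRAY") // "X-RAY", "X RAY" -> "XRAY"
    .replace(/[^A-Z\s]/g, " ")          // punctuation and digits -> spaces
    .split(/\s+/)
    .filter(Boolean)
    .map((word) => ALIASES.get(word) ?? word);
}

// True when the spoken words match the expected answer word for word.
function isCorrect(spoken, expected) {
  const said = normalize(spoken);
  const want = normalize(expected);
  return said.length === want.length && said.every((w, i) => w === want[i]);
}

// Browser wiring (assumes the `speech` recognizer created earlier and a
// hypothetical `challenge` object with an `answer` string):
// speech.onresult = (event) => {
//   if (isCorrect(transcriptFrom(event), challenge.answer)) {
//     // celebrate, then load the next challenge
//   }
// };
```

Comparing word arrays instead of raw strings means extra spaces or a trailing period from the recognizer never cause a false "wrong answer".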

And that’s the whole project! Here’s the link to the site, if you’d like to try it out. It’s entirely open source, so feel free to peek around if you’re curious about any of the implementation details.

# Footnotes

1. And because I’d like to be able to listen to plane spotting and ATC breakdown videos without having to pause the video to interpret the letters being said…

2. Notably, pressing the hint button doesn’t automatically skip you; you still have to say the correct answer. This was inspired by Duolingo’s design, whose team definitely has more UX experience with language learning than I do!

3. Firefox supports speech synthesis, the part of the Web Speech API that handles text-to-speech. However, because the speech recognition half of the API relies on sending audio to remote servers, Mozilla hasn’t shipped support for it. The site detects this and displays a generic “…speech recognition is not supported in this browser” message. Yes, there are speech recognition models that you can load into the browser with WASM, but that feels like a disproportionate amount of effort for the small share of users who won’t get the intended experience.